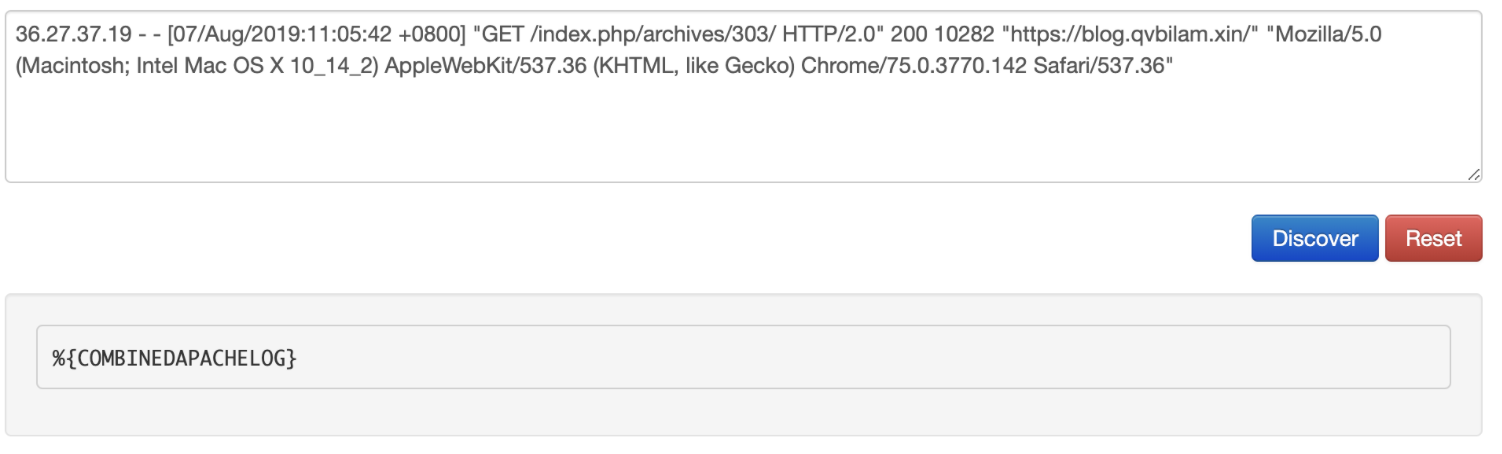

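Nginx log formats and their configuration were covered in detail in the Filter article. Here I use the Grok Debugger tool to generate and check the Grok pattern, simply because it is convenient. As in the screenshot above, it gives you the Grok pattern directly.

Nginx Log Parsing

Configuration file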

# vim actual/nginx.conf

input{

http{

port => 8601

}

}

filter{

grok{

match => {

"message" => "%{COMBINEDAPACHELOG}"

}

}

mutate{

remove_field => ["headers"]

}

}

output{

stdout{

codec => rubydebug

}

}
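As a rough illustration of which fields `%{COMBINEDAPACHELOG}` pulls out of a line, here is a small Python sketch built on a simplified regular expression. It is only an approximation and not the actual Grok definition: the names `COMBINED` and `parse_combined` are made up for this example, and unlike the Logstash output shown in this post it does not keep the surrounding quotes in `referrer` and `agent`.

```python
import re

# Simplified stand-in for the combined access log format (an assumption,
# not the real Grok pattern definition).
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_combined(line):
    """Return a dict of named fields, or None when the line does not match."""
    match = COMBINED.search(line)
    return match.groupdict() if match else None


sample = (
    '36.27.37.19 - - [07/Aug/2019:11:05:42 +0800] '
    '"GET /index.php/archives/303/ HTTP/2.0" 200 10282 '
    '"https://blog.qvbilam.xin/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2)"'
)
fields = parse_combined(sample)
print(fields["clientip"], fields["verb"], fields["request"], fields["response"])
```

A line that does not fit the format returns `None`, which plays the same role as the `_grokparsefailure` tag in Logstash.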

# Start Logstash (-r reloads the config automatically when it changes)

logstash -f actual/nginx.conf -r

# Send a request

curl http://127.0.0.1:8601 -X POST -d '

36.27.37.19 - - [07/Aug/2019:11:05:42 +0800] "GET /index.php/archives/303/ HTTP/2.0" 200 10282 "https://blog.qvbilam.xin/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36"

'

ok

# Result

{

"ident" => "-",

"httpversion" => "2.0",

"response" => "200",

"auth" => "-",

"timestamp" => "07/Aug/2019:11:05:42 +0800",

"verb" => "GET",

"@timestamp" => 2019-08-07T04:00:14.385Z,

"@version" => "1",

"message" => "\n36.27.37.19 - - [07/Aug/2019:11:05:42 +0800] \"GET /index.php/archives/303/ HTTP/2.0\" 200 10282 \"https://blog.qvbilam.xin/\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36\"\n",

"request" => "/index.php/archives/303/",

"agent" => "\"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36\"",

"clientip" => "36.27.37.19",

"referrer" => "\"https://blog.qvbilam.xin/\"",

"host" => "127.0.0.1",

"bytes" => "10282"

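}

The @timestamp field is the time this log line was read, while timestamp is the time recorded in the log itself. The next step is to overwrite @timestamp with the value of timestamp, remove the message and timestamp fields, run geoip on clientip, and parse agent.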
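To make the time replacement concrete, here is a minimal Python sketch (not Logstash code) of the conversion involved: the Nginx time `07/Aug/2019:11:05:42 +0800` is parsed with its offset and then expressed in UTC, which is how `@timestamp` is stored. The function name `nginx_time_to_utc` is hypothetical, and the sketch assumes English month abbreviations.

```python
from datetime import datetime, timezone


def nginx_time_to_utc(raw):
    """Parse an Nginx access-log time and return it as a UTC ISO-style string."""
    # %d/%b/%Y:%H:%M:%S %z corresponds to the pattern dd/MMM/yyyy:HH:mm:ss Z
    local_time = datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S %z")
    utc_time = local_time.astimezone(timezone.utc)
    return utc_time.strftime("%Y-%m-%dT%H:%M:%S.000Z")


converted = nginx_time_to_utc("07/Aug/2019:11:05:42 +0800")
print(converted)  # 2019-08-07T03:05:42.000Z
```

The eight-hour shift is why the parsed event in this post shows `03:05:42` even though the log line says `11:05:42`.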

# vim actual/nginx.conf

filter{

grok{

match => {

"message" => "%{COMBINEDAPACHELOG}"

}

}

# Save the original @timestamp (the read time) in a new field

ruby{

code => "event.set('@old_timestamp',event.get('@timestamp'))"

}

# Convert the log time into Logstash's time format

# 07/Aug/2019:11:05:42 +0800

date{

match => ['timestamp','dd/MMM/yyyy:HH:mm:ss Z']

}

# Remove fields

mutate{

remove_field => ["headers","timestamp","message"]

}

# Look up location information for the IP

geoip{

source => 'clientip'

fields => ["country_name","city_name","region_name","latitude","longitude"]

}

# Parse the user agent

useragent{

source => 'agent'

target => "useragent"

}

}

Result

# Request

➜ /Users/qvbilam/Study/logstash curl http://127.0.0.1:8601 -X POST -d '

36.27.37.19 - - [07/Aug/2019:11:05:42 +0800] "GET /index.php/archives/303/ HTTP/2.0" 200 10282 "https://blog.qvbilam.xin/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36"

'

ok

# Result

{

"@old_timestamp" => 2019-08-07T07:22:53.300Z,

"useragent" => {

"os_major" => "10",

"major" => "75",

"build" => "",

"name" => "Chrome",

"minor" => "0",

"device" => "Other",

"patch" => "3770",

"os_minor" => "14",

"os_name" => "Mac OS X",

"os" => "Mac OS X"

},

"ident" => "-",

"httpversion" => "2.0",

"response" => "200",

"auth" => "-",

"verb" => "GET",

"@timestamp" => 2019-08-07T03:05:42.000Z,

"@version" => "1",

"request" => "/index.php/archives/303/",

"geoip" => {

"region_name" => "Zhejiang",

"city_name" => "Hangzhou",

"latitude" => 30.294,

"longitude" => 120.1619,

"country_name" => "China"

},

"agent" => "\"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36\"",

"clientip" => "36.27.37.19",

"referrer" => "\"https://blog.qvbilam.xin/\"",

"host" => "127.0.0.1",

"bytes" => "10282"

}

Output to Elasticsearch

With the steps above, a single log line was parsed successfully. The next step is to parse all the data in a file and write it to Elasticsearch.

# Write the last 100 lines of the nginx log to a new file

tail -n 100 blog.qvbilam.xin_nginx.log >> my_nginx_log.log

Modify the configuration file

input{

#http{ port => 8601 }

file{

path => "/Users/qvbilam/Desktop/*.log"

start_position => "beginning"

#start_position => "end"

sincedb_path => "/usr/local/since"

}

}

filter{

grok{

match => {

"message" => "%{COMBINEDAPACHELOG}"

}

}

# Convert the log time into Logstash's time format

# 07/Aug/2019:11:05:42 +0800

date{

match => ['timestamp','dd/MMM/yyyy:HH:mm:ss Z']

}

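# Look up location information for the IP

geoip{

source => 'clientip'

fields => ["country_name","city_name","region_name","latitude","longitude"]

}

# Parse the user agent

useragent{

source => 'agent'

target => "useragent"

}

# mutate runs last so that agent is still present when useragent reads it

mutate{

# Remove fields

remove_field => ["headers","timestamp","message","agent"]

# Set a hidden (metadata) field, used to test the ES index name

add_field => { "[@metadata][index]" => "nginx_log_%{+YYYY.MM}" }

}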

}

output{

stdout{ codec => rubydebug }

elasticsearch{

hosts => ["127.0.0.1:8101"]

index => "nginx_log_%{+YYYY.MM.dd}"

}

}

After startup, Logstash writes the events automatically.
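The `index` setting uses `%{+YYYY.MM.dd}`, which Logstash fills in from the event's `@timestamp` in UTC. The following Python sketch mimics that naming rule so the resulting index names are easier to predict; the function `daily_index_name` is hypothetical and only illustrates the date formatting.

```python
from datetime import datetime, timedelta, timezone


def daily_index_name(event_time, prefix="nginx_log_"):
    """Build a per-day index name from an event time, using the UTC date."""
    utc_time = event_time.astimezone(timezone.utc)
    return prefix + utc_time.strftime("%Y.%m.%d")


cst = timezone(timedelta(hours=8))  # the +0800 offset used in the sample logs
midday = daily_index_name(datetime(2019, 8, 7, 11, 5, 42, tzinfo=cst))
early = daily_index_name(datetime(2019, 8, 7, 7, 30, 0, tzinfo=cst))
print(midday)  # nginx_log_2019.08.07
print(early)   # nginx_log_2019.08.06
```

Because the date is taken in UTC, a request logged before 08:00 local time (+0800) ends up in the index for the previous day.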

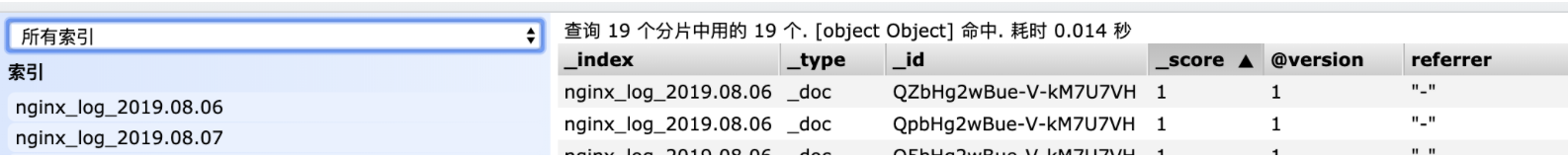

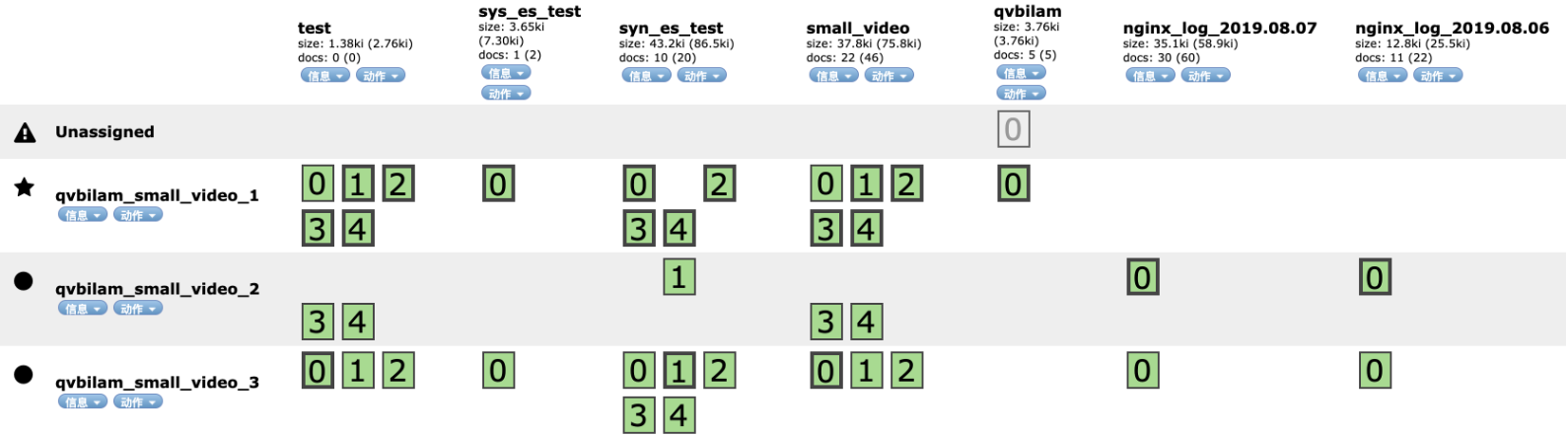

As shown, Elasticsearch created an index named after the corresponding date. Viewing the index information:

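I checked the official documentation and could not find a way to set the number of shards and replicas for the Elasticsearch index in output. To deal with load concerns, you can create a dynamic index template in Elasticsearch; if the fields are known and fixed, a static index template is the better choice. (Filling this gap needs a separate Elasticsearch article...)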
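As a hedged sketch of what such a template could look like, the Python snippet below only builds the JSON body; it does not talk to Elasticsearch. It assumes the legacy `_template` endpoint of Elasticsearch 6.x/7.x (newer versions use `_index_template` and nest the settings under a `template` object). The function name `build_index_template` and the shard and replica counts are illustrative assumptions, not values from this setup.

```python
import json


def build_index_template(pattern, shards, replicas):
    """Return a legacy index template body that applies to matching index names."""
    return {
        "index_patterns": [pattern],
        "settings": {
            "number_of_shards": shards,
            "number_of_replicas": replicas,
        },
    }


# Illustrative values only: one shard and no replicas for a single local node.
body = build_index_template("nginx_log_*", shards=1, replicas=0)
print(json.dumps(body, indent=2))
```

The printed JSON could then be sent with a PUT request to `_template/<name>` on the Elasticsearch host, so that every new `nginx_log_*` index picks up these settings when it is created.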

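MySQL Log Parsing

MySQL has five kinds of logs: the error log, the query log, the slow query log, the transaction log, and the binary log. View the log locations: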

SHOW GLOBAL VARIABLES LIKE '%log%';

# Partial output

+--------------------------------------------+-----------------------------------------------------+

| Variable_name | Value |

+--------------------------------------------+-----------------------------------------------------+

| general_log | OFF | # whether the general query log is enabled

| general_log_file | /data/mysql/iz2ze1epzqld1dhcl3itv5z.log | # general query log file

| log_error | /data/mysql/mysql-error.log | # error log file

+--------------------------------------------+-----------------------------------------------------+

Slow query log

Note: the log format differs slightly between MySQL versions; for example, version 5.7.25 includes an Id field. Adapt this to your own setup.

# Check the slow query settings

show variables like 'slow_query%';

+---------------------+----------------------------------------------------------+

| Variable_name | Value |

+---------------------+----------------------------------------------------------+

| slow_query_log | OFF |

| slow_query_log_file | /usr/local/var/mysql/erhuadamowangdeMacBook-Pro-slow.log |

+---------------------+----------------------------------------------------------+

# Slow query threshold

show variables like 'long_query_time';

+-----------------+-----------+

| Variable_name | Value |

+-----------------+-----------+

| long_query_time | 10.000000 |

+-----------------+-----------+

1 row in set (0.01 sec)

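# Enable the slow query log

set global slow_query_log='ON';

# Set the log file location/name

set global slow_query_log_file='/usr/local/var/mysql/slow.log';

# Set the slow query threshold (seconds)

set global long_query_time=1;

# Restart mysql (optional; values set with SET GLOBAL are lost on restart unless they are also in my.cnf)

brew services restart mysql

# Test a slow query

select sleep(3);

# Reconnect to mysql and test again (the global long_query_time only applies to new connections)

select sleep(2);

Excerpt from the log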

# Time: 2019-08-12T04:41:35.160487Z

# User@Host: root[root] @ localhost [] Id: 41799

# Query_time: 3.013489 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 0

SET timestamp=1565584895;

select sleep(3);

# Time: 2019-08-12T04:42:47.196016Z

# User@Host: root[root] @ localhost [] Id: 41802

# Query_time: 2.001562 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 0

SET timestamp=1565584967;

select sleep(2);

# Time: 160524 5:12:29

# User@Host: user_a[xxxx] @ [10.166.140.109] Id: 41799

# Query_time: 1.711086 Lock_time: 0.000040 Rows_sent: 385489 Rows_examined: 385489

use test;

SET timestamp=1464037949;

SELECT name from test;

# Time: 160524 5:12:29

# User@Host: user_a[xxxx] @ [10.166.140.109] Id: 41799

# Query_time: 1.711086 Lock_time: 0.000040 Rows_sent: 385489 Rows_examined: 385489

use test;

SET timestamp=1464037949;

SELECT id from test;

# Time: 160524 5:12:29

# User@Host: user_a[xxxx] @ [10.166.140.109] Id: 41799

# Query_time: 1.711086 Lock_time: 0.000040 Rows_sent: 385489 Rows_examined: 385489

use test;

SET timestamp=1464037949;

SELECT * from test;

Grok configuration
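The Grok pattern in this section is dense, so as a rough illustration here is just its statistics line (`# Query_time: ... Rows_examined: ...`) written as a plain Python regular expression with the same type conversions (float and int). The names `STATS` and `parse_stats` are made up for this sketch; the full pattern also handles the user, host, database and SQL text.

```python
import re

# Only the "# Query_time ..." line of a slow-log entry (simplified assumption).
STATS = re.compile(
    r"^#\s+Query_time:\s+(?P<query_time>[\d.]+)"
    r"\s+Lock_time:\s+(?P<lock_time>[\d.]+)"
    r"\s+Rows_sent:\s+(?P<rows_sent>\d+)"
    r"\s+Rows_examined:\s+(?P<rows_examined>\d+)"
)


def parse_stats(line):
    """Return the timing and row counters as numbers, or None if no match."""
    match = STATS.match(line)
    if match is None:
        return None
    return {
        "query_time": float(match["query_time"]),
        "lock_time": float(match["lock_time"]),
        "rows_sent": int(match["rows_sent"]),
        "rows_examined": int(match["rows_examined"]),
    }


stats = parse_stats(
    "# Query_time: 1.711086 Lock_time: 0.000040 Rows_sent: 385489 Rows_examined: 385489"
)
print(stats)
```

Converting to numbers here mirrors the `:float` and `:int` suffixes in the Grok pattern, which is what lets Elasticsearch treat these fields as numeric.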

# cd /usr/local/Cellar/logstash/vendor/bundle/jruby/2.5.0/gems/logstash-patterns-core-4.1.2/patterns

# vim mysql-slow

MYSQLSLOW (?m)^#\s+User@Host:\s+%{USER:user}\[[^\]]+\]\s+@\s+(?:(?<clienthost>\S*) )?\[(?:%{IPV4:clientip})?\]\s+Id:\s+%{NUMBER:row_id:int}\n#\s+Query_time:\s+%{NUMBER:query_time:float}\s+Lock_time:\s+%{NUMBER:lock_time:float}\s+Rows_sent:\s+%{NUMBER:rows_sent:int}\s+Rows_examined:\s+%{NUMBER:rows_examined:int}\n\s*(?:use %{DATA:database};\s*\n)?SET\s+timestamp=%{NUMBER:timestamp};\n\s*(?<sql>(?<action>\w+)\b.*;)\s*(?:\n#\s+Time)?.*$

Logstash configuration

input{

#http{ port => 8601 }

file{

path => "/Users/qvbilam/Sites/test/logstash/log/slow.log"

start_position => "beginning"

#start_position => "end"

sincedb_path => "/usr/local/since"

codec => multiline {

pattern => "^# User@Host:"

negate => true

what => "previous"

}

}

}

filter{

grok{

match => {

"message" => "%{MYSQLSLOW}"

}

}

# Field settings

mutate{

remove_field => ["tags","message"]

add_field => { "[@metadata][tags]" => "%{tags}" }

}

}

output{

if ("_grokparsefailure" not in [@metadata][tags]) {

stdout{ codec => rubydebug }

# To write to Elasticsearch, copy the output settings from the Nginx section above

}

}

Parsed result
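Each event in this output corresponds to one slow-query entry because the multiline codec (`negate => true`, `what => "previous"`) joins every line that does not start with `# User@Host:` onto the previous event. A minimal Python sketch of that grouping rule follows; the function name `group_multiline` is hypothetical and the sample lines are shortened from the log excerpt.

```python
def group_multiline(lines, start_prefix="# User@Host:"):
    """Group lines into events; a line with start_prefix begins a new event."""
    events = []
    current = []
    for line in lines:
        if line.startswith(start_prefix) and current:
            # A new entry starts, so close the event collected so far.
            events.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events


sample_lines = [
    "# Time: 2019-08-12T04:41:35.160487Z",
    "# User@Host: root[root] @ localhost [] Id: 41799",
    "# Query_time: 3.013489 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 0",
    "SET timestamp=1565584895;",
    "select sleep(3);",
    "# Time: 2019-08-12T04:42:47.196016Z",
    "# User@Host: root[root] @ localhost [] Id: 41802",
    "# Query_time: 2.001562 Lock_time: 0.000000 Rows_sent: 1 Rows_examined: 0",
    "SET timestamp=1565584967;",
    "select sleep(2);",
]
events = group_multiline(sample_lines)
print(len(events))  # 3
```

Under this rule the very first `# Time:` line forms an event of its own, which would not match the Grok pattern, and later `# Time:` lines are attached to the end of the preceding entry. That is consistent with the pattern ending in an optional `# Time` part and with the output section skipping events tagged `_grokparsefailure`.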

{

"rows_examined" => 0,

"path" => "/Users/qvbilam/Sites/test/logstash/log/slow.log",

"sql" => "select sleep(3);",

"user" => "root",

"rows_sent" => 1,

"clienthost" => "localhost",

"action" => "select",

"lock_time" => 0.0,

"query_time" => 3.013489,

"@timestamp" => 2019-08-13T03:11:11.396Z,

"row_id" => 41799,

"host" => "erhuadaangdeMBP",

"@version" => "1",

"timestamp" => "1565584895"

}

{

"rows_examined" => 0,

"path" => "/Users/qvbilam/Sites/test/logstash/log/slow.log",

"sql" => "select sleep(2);",

"user" => "root",

"rows_sent" => 1,

"clienthost" => "localhost",

"action" => "select",

"lock_time" => 0.0,

"query_time" => 2.001562,

"@timestamp" => 2019-08-13T03:11:11.399Z,

"row_id" => 41802,

"host" => "erhuadaangdeMBP",

"@version" => "1",

"timestamp" => "1565584967"

}

{

"rows_examined" => 385489,

"path" => "/Users/qvbilam/Sites/test/logstash/log/slow.log",

"sql" => "SELECT name from test;",

"user" => "user_a",

"rows_sent" => 385489,

"action" => "SELECT",

"lock_time" => 4.0e-05,

"clientip" => "10.166.140.109",

"query_time" => 1.711086,

"@timestamp" => 2019-08-13T03:11:11.400Z,

"row_id" => 41799,

"host" => "erhuadaangdeMBP",

"database" => "test",

"@version" => "1",

"timestamp" => "1464037949"

}

{

"rows_examined" => 385489,

"path" => "/Users/qvbilam/Sites/test/logstash/log/slow.log",

"sql" => "SELECT id from test;",

"user" => "user_a",

"rows_sent" => 385489,

"action" => "SELECT",

"lock_time" => 4.0e-05,

"clientip" => "10.166.140.109",

"query_time" => 1.711086,

"@timestamp" => 2019-08-13T03:11:11.402Z,

"row_id" => 41799,

"host" => "erhuadaangdeMBP",

"database" => "test",

"@version" => "1",

"timestamp" => "1464037949"

}

Error log

# Check the error log location

show variables like 'log_error';

+---------------+-----------------------------+

| Variable_name | Value |

+---------------+-----------------------------+

| log_error | /data/mysql/mysql-error.log |

+---------------+-----------------------------+

1 row in set (0.00 sec)

Excerpt from the log

2019-03-23T08:47:08.041977Z 0 [Warning] 'user' entry 'root@localhost' ignored in --skip-name-resolve mode.

2019-03-23T08:47:08.042005Z 0 [Warning] 'user' entry 'mysql.session@localhost' ignored in --skip-name-resolve mode.

2019-03-23T08:47:08.042012Z 0 [Warning] 'user' entry 'mysql.sys@localhost' ignored in --skip-name-resolve mode.

2019-03-23T08:47:08.042027Z 0 [Warning] 'db' entry 'performance_schema mysql.session@localhost' ignored in --skip-name-resolve mode.

2019-03-23T08:47:08.042031Z 0 [Warning] 'db' entry 'sys mysql.sys@localhost' ignored in --skip-name-resolve mode.

2019-03-23T08:47:08.042038Z 0 [Warning] 'proxies_priv' entry '@ root@localhost' ignored in --skip-name-resolve mode.

2019-03-23T08:47:08.042200Z 0 [Warning] 'tables_priv' entry 'user mysql.session@localhost' ignored in --skip-name-resolve mode.

2019-03-23T08:47:08.042207Z 0 [Warning] 'tables_priv' entry 'sys_config mysql.sys@localhost' ignored in --skip-name-resolve mode.

2019-03-23T08:47:08.050072Z 0 [Note] Giving 0 client threads a chance to die gracefully

2019-03-23T08:47:08.050085Z 0 [Note] Shutting down slave threads

2019-03-23T08:47:08.050092Z 0 [Note] Forcefully disconnecting 0 remaining clients

2019-03-23T08:47:08.050096Z 0 [Note] Event Scheduler: Purging the queue. 0 events

2019-03-23T08:47:08.050226Z 0 [Note] Binlog end

2019-03-23T08:47:08.053473Z 0 [Note] Shutting down plugin 'ngram'

2019-03-23T08:47:08.053488Z 0 [Note] Shutting down plugin 'ARCHIVE'

2019-03-23T08:47:08.053492Z 0 [Note] Shutting down plugin 'partition'

2019-03-23T08:47:08.053495Z 0 [Note] Shutting down plugin 'BLACKHOLE'

2019-03-23T08:47:08.053500Z 0 [Note] Shutting down plugin 'PERFORMANCE_SCHEMA'

Note: because every line of the error log has the same format, Dissect is used to parse it by splitting on delimiters.
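To show what splitting on delimiters means for the mapping `%{log_time} %{number} [%{log_type}] %{content}`, here is a small Python sketch that does the same thing with plain string operations and no regular expressions. The function name `dissect_error_line` is hypothetical; as with Dissect, all values stay strings.

```python
def dissect_error_line(line):
    """Split one MySQL error-log line on fixed delimiters; None if it does not fit."""
    parts = line.split(" ", 2)  # log_time, number, and the rest of the line
    if len(parts) != 3:
        return None
    log_time, number, rest = parts
    if not rest.startswith("["):
        return None
    # Everything up to "] " is the log type, the remainder is the message.
    log_type, separator, content = rest[1:].partition("] ")
    if not separator:
        return None
    return {
        "log_time": log_time,
        "number": number,
        "log_type": log_type,
        "content": content,
    }


parsed = dissect_error_line(
    "2019-03-23T08:47:08.050072Z 0 [Note] Giving 0 client threads a chance to die gracefully"
)
print(parsed)
```

Because only fixed delimiters are involved, this kind of parsing is cheaper than a Grok match, but it relies on every line having exactly this shape.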

Logstash configuration

input{

file{

path => "/Users/qvbilam/Sites/test/logstash/log/mysql_error.log"

start_position => "beginning"

#start_position => "end"

sincedb_path => "/usr/local/since"

}

}

filter{

dissect{

mapping => { "message" => "%{log_time} %{number} [%{log_type}] %{content}" }

}

# Field settings

mutate{

add_field => { "[@metadata][time]" => "%{log_time}"}

add_field => { "[@metadata][tags]" => "%{tags}"}

remove_field => ["message","@version","tags","host","log_time"]

}

# Replace the read time with the log time

date{

match => ["[@metadata][time]",'ISO8601']

}

}

output{

if ("_dissectfailure" not in [@metadata][tags]) {

stdout{ codec => rubydebug }

#stdout{ codec => rubydebug }

}

}

Parsed result

{

"content" => "'tables_priv' entry 'sys_config mysql.sys@localhost' ignored in --skip-name-resolve mode.",

"number" => "0",

"log_type" => "Warning",

"path" => "/Users/qvbilam/Sites/test/logstash/log/mysql_error.log",

"@timestamp" => 2019-03-23T08:47:08.042Z

}

{

"content" => "Giving 0 client threads a chance to die gracefully",

"number" => "0",

"log_type" => "Note",

"path" => "/Users/qvbilam/Sites/test/logstash/log/mysql_error.log",

"@timestamp" => 2019-03-23T08:47:08.050Z

}

Rollback log

-- On reflection, I decided not to bother with this one :)

-- Write a custom log when PHP rolls back a database operation instead.