Install Filebeat

# macOS
curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.1.0-darwin-x86_64.tar.gz
# Extract
tar xzvf filebeat-7.1.0-darwin-x86_64.tar.gz

# Linux
curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.1.0-linux-x86_64.tar.gz
# Extract
tar xzvf filebeat-7.1.0-linux-x86_64.tar.gz

# Copy the extracted directory (renamed to ./filebeat here) into place
cp -r ./filebeat /usr/local/Cellar/filebeat/
# Add the alias below to ~/.zshrc
vim ~/.zshrc
alias filebeat="/usr/local/Cellar/filebeat/filebeat"
# Reload so the alias takes effect immediately
source ~/.zshrc

Filebeat

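Filebeat processes files. It consists of two main components, inputs and harvesters, which work together to send the processed data to the configured output. Filebeat guarantees that every event is delivered to the configured output at least once, without data loss. It does this by storing the delivery state of each event in the registry file.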

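If the configured output is blocked and has not acknowledged all events, Filebeat keeps trying to send them until the output confirms it has received them. If Filebeat shuts down while events are still in flight, it does not wait for the output to acknowledge them before exiting; after a restart, any unacknowledged events are sent again. This guarantees that every event is sent at least once, but the output may receive duplicates. To make Filebeat wait a given amount of time for acknowledgements before shutting down, set the shutdown_timeout option.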
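The at-least-once behaviour can be illustrated with a toy Python model. It is a deliberate simplification, not Filebeat's implementation: the registry is reduced to a single offset, and `run`, `registry` and `crash_after_send` are hypothetical names. Progress is only persisted after the output acknowledges an event, so an event that was sent but not acknowledged before shutdown is sent again after a restart.

```python
# Toy model of at-least-once delivery (not Filebeat's code).
# The registry offset only advances after the output acknowledges an event.


def run(lines, registry, output, crash_after_send=None):
    """Send events starting from registry["offset"].

    crash_after_send: hypothetical hook that simulates a shutdown right after
    the event at this index was sent but before its ack was recorded.
    Returns True if all events were sent and acknowledged.
    """
    for i in range(registry["offset"], len(lines)):
        output.append(lines[i])          # the event reaches the output
        if i == crash_after_send:
            return False                 # shut down before the ack is stored
        registry["offset"] = i + 1       # ack received: persist progress
    return True


lines = ["event-0", "event-1", "event-2"]
registry = {"offset": 0}
output = []

run(lines, registry, output, crash_after_send=1)  # first run stops mid-way
run(lines, registry, output)                      # restart resumes from the registry
print(output)  # ['event-0', 'event-1', 'event-1', 'event-2']
```

The duplicated `event-1` is the case shutdown_timeout is meant to reduce: waiting a little longer for the acknowledgement before exiting gives the registry a chance to catch up.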

Harvesters

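A harvester reads the contents of a single file. It reads the file line by line and sends the content to the output. One harvester is started per file, and the harvester is responsible for opening and closing it, which means the file stays open while the harvester is running. If a file is removed or renamed while it is being harvested, Filebeat keeps reading it; the side effect is that the disk space is not freed until the harvester closes. If a file that has already been closed (fully read) later changes in size, it is picked up and read again.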
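A rough sketch of the harvester idea: keep a byte offset and read only complete lines after it, so the next read continues where the last one stopped. This is a simplification under stated assumptions (UTF-8 text, `\n` line endings); `harvest` is a hypothetical helper, not a Filebeat API.

```python
import os
import tempfile


def harvest(path, offset):
    """Read complete lines of `path` starting at byte `offset`.

    Returns (lines, new_offset). A trailing line without a newline is left
    for the next call, since the writer may not have finished it yet.
    """
    lines = []
    # This sketch reopens the file on every call; a real harvester keeps it open.
    with open(path, "rb") as f:
        f.seek(offset)
        for raw in f:
            if not raw.endswith(b"\n"):
                break                        # incomplete last line: stop here
            lines.append(raw.rstrip(b"\n").decode("utf-8"))
            offset += len(raw)               # remember where the next read starts
    return lines, offset


with tempfile.TemporaryDirectory() as d:
    log = os.path.join(d, "nginx.log")
    with open(log, "wb") as f:
        f.write(b"line 1\nline 2\nline 3")   # "line 3" is still being written
    first, pos = harvest(log, 0)
    with open(log, "ab") as f:
        f.write(b"\nline 4\n")
    second, pos = harvest(log, pos)

print(first, second, pos)  # ['line 1', 'line 2'] ['line 3', 'line 4'] 28
```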

Input

An input manages the harvesters and finds all sources to read from. For an input of type log, it finds every file matching the configured paths and starts a harvester for each one. Each input runs in its own goroutine.
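The input's job of finding files and starting one harvester per file can be sketched as a glob scan that remembers which files it has already seen. As a simplifying assumption, files are identified here by device and inode number (on Linux and macOS Filebeat also tracks files by inode and device); `scan` and its state dictionary are hypothetical.

```python
import glob
import os
import tempfile


def scan(patterns, states):
    """One scan pass of a toy log-type input.

    Each file matching the glob patterns is identified by (device, inode).
    An identity not seen before gets a new state at offset 0, i.e. a new
    harvester would be started for it. Returns those paths.
    """
    started = []
    for pattern in patterns:
        for path in sorted(glob.glob(pattern)):
            st = os.stat(path)
            key = (st.st_dev, st.st_ino)
            if key not in states:
                states[key] = {"path": path, "offset": 0}
                started.append(path)
    return started


with tempfile.TemporaryDirectory() as d:
    for name in ("a.log", "b.log", "notes.txt"):
        open(os.path.join(d, name), "w").close()
    states = {}
    patterns = [os.path.join(d, "*.log"), os.path.join(d, "a.log")]
    first = scan(patterns, states)    # a.log matched twice, started once
    second = scan(patterns, states)   # nothing new on the next pass

print([os.path.basename(p) for p in first], second)  # ['a.log', 'b.log'] []
```

Keying on the file identity rather than the path is what keeps overlapping patterns (like /var/log/messages and /var/log/*.log in one input) from starting two harvesters for the same file.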

Supported input types

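# Read Redis slow log entries
filebeat.inputs:
  - type: redis
    hosts: ["localhost:6379"]
    password: "${redis_pwd}"
    scan_frequency: 10s  # how often Redis is scanned for new slow log entries, default 10s
    timeout: 1s          # how long to wait for a Redis response before the input returns an error, default 1s
    enabled: true        # whether this input is enabled, default true
    # Custom fields
    fields:
      appid: 123
      name: qvbilam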

# Read log files
filebeat.inputs:
  - type: log
    paths:
      - /var/log/messages
      - /var/log/*.log

Output

Output types supported by Filebeat (only one output can be enabled at a time, so these are alternatives):

# Print to the console (for debugging)
output.console:
  pretty: true

# Send to Elasticsearch
output.elasticsearch:
  hosts: ["http://127.0.0.1:8101"]
  username: "<only needed if Elasticsearch authentication is enabled>"
  password: "<only needed if Elasticsearch authentication is enabled>"

# Send to Logstash
output.logstash:
  hosts: ["127.0.0.1:7001"]  # host:port of the Logstash beats input

# Send to Redis
output.redis:
  hosts: ["localhost:6379"]
  password: "my_password"
  key: "filebeat"
  db: 0
  timeout: 5

Demo

Nginx Log

# vim nginx.log

123.182.247.180 - - [18/Nov/2019:08:39:39 +0800] "GET /usr/plugins/HoerMouse/static/image/sketch/normal.cur HTTP/2.0" 200 4286 "https://blog.qvbilam.xin/" "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.90 Safari/537.36"

123.182.247.180 - - [18/Nov/2019:08:39:39 +0800] "GET /usr/plugins/HoerMouse/static/image/sketch/link.cur HTTP/2.0" 200 4286 "https://blog.qvbilam.xin/" "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.90 Safari/537.36"

103.219.184.76 - - [18/Nov/2019:09:26:57 +0800] "GET /index.php/start-page.html HTTP/2.0" 200 3654 "https://blog.qvbilam.xin/index.php/archives/69/" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.97 Safari/537.36"

103.219.184.76 - - [18/Nov/2019:09:27:02 +0800] "GET / HTTP/2.0" 200 4471 "https://blog.qvbilam.xin/index.php/start-page.html" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.97 Safari/537.36"

101.251.238.50 - - [18/Nov/2019:09:55:29 +0800] "GET / HTTP/1.1" 400 264 "-" "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:52.0) Gecko/20100101 Firefox/52.0"

184.105.247.196 - - [18/Nov/2019:10:39:44 +0800] "GET / HTTP/1.1" 200 17139 "-" "-"

Filebeat Conf

filebeat.inputs:
  - type: stdin
output.console:
  pretty: true  # pretty-print the output

Echo

tail -n 10 ./log/nginx.log | filebeat -e -c ./conf/nginx.yml

# One of the events:

{
  "@timestamp": "2019-11-18T04:54:33.794Z",
  "@metadata": {
    "beat": "filebeat",
    "type": "_doc",
    "version": "7.1.1"
  },
  "host": {
    "name": "erhuadaangdeMBP"
  },
  "agent": {
    "version": "7.1.1",
    "type": "filebeat",
    "ephemeral_id": "daa85e43-925c-4aa2-9174-6ade5a15a5fc",
    "hostname": "erhuadaangdeMBP",
    "id": "ec2ec44f-140c-491f-ba06-668b7f3de800"
  },
  "log": {
    "offset": 0,
    "file": {
      "path": ""
    }
  },
  "message": "123.182.247.180 - - [18/Nov/2019:08:39:39 +0800] \"GET /usr/plugins/HoerMouse/static/image/sketch/normal.cur HTTP/2.0\" 200 4286 \"https://blog.qvbilam.xin/\" \"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.90 Safari/537.36\"",
  "input": {
    "type": "stdin"
  },
  "ecs": {
    "version": "1.0.0"
  }
}

Question

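# Came back from dinner and found Filebeat had printed lots of messages like this:
INFO [monitoring] log/log.go:144 Non-zero metrics in the last 30
# Suggested fix found online: add enabled: true to the input and the output.
# Total bullshit! It's already true by default: "The default value is true."

Explanation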

2019-11-18T12:10:30.392+0800 INFO [monitoring] log/log.go:144 Non-zero metrics in the last 30s {"monitoring": {"metrics": {"beat":{"cpu":{"system":{"ticks":26,"time":{"ms":3}},"total":{"ticks":52,"time":{"ms":6},"value":52},"user":{"ticks":26,"time":{"ms":3}}},"info":{"ephemeral_id":"4c6ae525-bc7c-475c-82f3-b5ca2a30787b","uptime":{"ms":60044}},"memstats":{"gc_next":4194304,"memory_alloc":3822472,"memory_total":7593360,"rss":-3383296}},"filebeat":{"harvester":{"open_files":-1,"running":0}},"libbeat":{"config":{"module":{"running":0}},"pipeline":{"clients":0,"events":{"active":0}}},"registrar":{"states":{"current":0}},"system":{"load":{"1":2.1255,"15":2.1221,"5":2.2031,"norm":{"1":0.1771,"15":0.1768,"5":0.1836}}}}}}

Converted to formatted JSON for easier reading:

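These lines are not errors. Filebeat periodically logs its own internal metrics that changed during the last period (controlled by logging.metrics.enabled, default true, and logging.metrics.period, default 30s); setting logging.metrics.enabled: false turns them off. Under monitoring.metrics the main groups are beat, filebeat, libbeat, registrar and system. The larger monitoring.metrics.beat.memstats.memory_alloc is, the more memory Filebeat is using.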
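To follow a value such as memory_alloc across many of these log lines, the JSON payload can be cut out of each line and parsed. A minimal sketch: `parse_metrics_line` and `get_path` are hypothetical helpers, and the sample line is a shortened copy of the real one above (only two metric groups kept).

```python
import json


def parse_metrics_line(line):
    """Return the JSON payload of a Filebeat 'Non-zero metrics' log line."""
    start = line.index("{")              # the payload starts at the first '{'
    return json.loads(line[start:])


def get_path(doc, dotted):
    """Follow a dotted path such as 'monitoring.metrics.beat.memstats.memory_alloc'."""
    for key in dotted.split("."):
        doc = doc[key]
    return doc


line = ('2019-11-18T12:10:30.392+0800 INFO [monitoring] log/log.go:144 '
        'Non-zero metrics in the last 30s {"monitoring": {"metrics": '
        '{"beat":{"memstats":{"memory_alloc":3822472}},'
        '"filebeat":{"harvester":{"open_files":-1,"running":0}}}}}')

metrics = parse_metrics_line(line)
print(get_path(metrics, "monitoring.metrics.beat.memstats.memory_alloc"))  # 3822472
print(get_path(metrics, "monitoring.metrics.filebeat.harvester.running"))  # 0
```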

{
  "monitoring": {
    "metrics": {
      "beat": {
        "cpu": {
          "system": {
            "ticks": 26,
            "time": {
              "ms": 3
            }
          },
          "total": {
            "ticks": 52,
            "time": {
              "ms": 6
            },
            "value": 52
          },
          "user": {
            "ticks": 26,
            "time": {
              "ms": 3
            }
          }
        },
        "info": {
          "ephemeral_id": "4c6ae525-bc7c-475c-82f3-b5ca2a30787b",
          "uptime": {
            "ms": 60044
          }
        },
        "memstats": {
          "gc_next": 4194304,
          "memory_alloc": 3822472,
          "memory_total": 7593360,
          "rss": -3383296
        }
      },
      "filebeat": {
        "harvester": {
          "open_files": -1,
          "running": 0
        }
      },
      "libbeat": {
        "config": {
          "module": {
            "running": 0
          }
        },
        "pipeline": {
          "clients": 0,
          "events": {
            "active": 0
          }
        }
      },
      "registrar": {
        "states": {
          "current": 0
        }
      },
      "system": {
        "load": {
          "1": 2.1255,
          "5": 2.2031,
          "15": 2.1221,
          "norm": {
            "1": 0.1771,
            "5": 0.1836,
            "15": 0.1768
          }
        }
      }
    }
  }
}

Practice

Ship a log file with Filebeat to Logstash, process it there, and forward it to Elasticsearch.

Note: I made logstash and filebeat available globally through aliases, so the exercises below call them directly.

alias logstash="/usr/local/Cellar/logstash/bin/logstash"
alias filebeat="/usr/local/Cellar/filebeat/filebeat"

Filebeat To Logstash

Filebeat

# Edit the config
vim conf/nginx.conf

# Read from the log file
filebeat.inputs:
  - type: log
    paths:
      - /Users/qvbilam/Sites/study/logstash/log/my_nginx_log.log
output.logstash:
  hosts: ["127.0.0.1:7001"]

# Start the service
filebeat -e -c conf/nginx.conf  # path of the config file created above, relative to the current directory

Logstash

Error

# Error 1: after stopping and restarting Logstash, Filebeat could not reconnect.
ERROR logstash/async.go:256 Failed to publish events caused by: write tcp 127.0.0.1:65029->127.0.0.1:7001: write: broken pipe
ERROR pipeline/output.go:121 Failed to publish events: write tcp 127.0.0.1:65029->127.0.0.1:7001: write: broken pipe
# Fix 1: stop both Logstash and Filebeat, then start Logstash first and Filebeat second. Error 1 goes away and Error 2 appears.
# Error 2: harmless, no impact
WARN beater/filebeat.go:357 Filebeat is unable to load the Ingest Node pipelines for the configured modules because the Elasticsearch output is not configured/enabled. If you have already loaded the Ingest Node pipelines or are using Logstash pipelines, you can ignore this warning.

Notice

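About Filebeat sending duplicate data to Logstash: while testing, I appended log lines to the file with vim, and duplicates showed up. The cause is that saving with vim can change the file's inode, so it no longer matches the inode Filebeat recorded in its registry; Filebeat then treats it as a new file and reads it again from the beginning.

Fix: appending with echo 'xxx' >> log/my_nginx_log.log solved it, because >> appends to the existing file and keeps its inode.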
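The inode difference can be checked directly. Whether vim actually writes a new file depends on its 'backupcopy' setting, so treat the second helper as an assumption about the editor's behaviour; both helper names are hypothetical.

```python
import os
import tempfile


def append_in_place(path, text):
    """Like `echo 'xxx' >> file`: the same file (same inode) grows."""
    with open(path, "a") as f:
        f.write(text)


def save_via_new_file(path, text):
    """Mimics an editor that saves by writing a new file and renaming it
    over the original: the path now points at a different inode."""
    with open(path) as f:
        content = f.read()
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        f.write(content + text)
    os.replace(tmp, path)   # rename over the original path


with tempfile.TemporaryDirectory() as d:
    log = os.path.join(d, "my_nginx_log.log")
    with open(log, "w") as f:
        f.write("line 1\n")
    ino_start = os.stat(log).st_ino
    append_in_place(log, "line 2\n")
    ino_after_echo = os.stat(log).st_ino
    save_via_new_file(log, "line 3\n")
    ino_after_save = os.stat(log).st_ino

print(ino_start == ino_after_echo, ino_after_echo == ino_after_save)  # True False
```

To Filebeat, a new inode at the same path looks like a new file, which is read again from offset 0 and therefore re-sends the lines that were already shipped.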

Test

# 1-1 URL-encoding (urlencode) issue
cd logstash/bin
# 1-2 Install the plugin
./logstash-plugin install logstash-filter-urldecode
# 1-3 Output confirming the installation
Validating logstash-filter-urldecode
Installing logstash-filter-urldecode
Installation successful
# 2-1 Escaping special characters

Set the following in the config file:

vim logstash/config/logstash.yml
config.support_escapes: true

Configuration

# Edit the config
vim filebeat.conf

input {
  beats {
    port => 7001
  }
}
output {
  stdout {
    codec => rubydebug
  }
}

# Start the service
logstash -f filebeat.conf  # started from the directory that contains the config file

# Logstash output

{
    "@version" => "1",
    "log" => {
        "offset" => 7425,
        "file" => {
            "path" => "/Users/qvbilam/Sites/study/logstash/log/my_nginx_log.log"
        }
    },
    "agent" => {
        "type" => "filebeat",
        "ephemeral_id" => "51c988b9-e14e-4826-ad8b-edfb709d63ef",
        "id" => "ec2ec44f-140c-491f-ba06-668b7f3de800",
        "version" => "7.1.1",
        "hostname" => "erhuadaangdeMBP"
    },
    "message" => "36.27.37.19 - - [07/Aug/2019:15:34:20 +0800] \"GET /admin/login.php?referer=https%3A%2F%2Fblog.qvbilam.xin%2Fadmin%2F HTTP/2.0\" 200 2198 \"-\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36\"",
    "@timestamp" => 2019-11-18T09:19:53.307Z,
    "host" => {
        "name" => "erhuadaangdeMBP"
    },
    "ecs" => {
        "version" => "1.0.0"
    },
    "tags" => [
        [0] "beats_input_codec_plain_applied"
    ],
    "input" => {
        "type" => "log"
    }
}

Logstash Process
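The pipeline below chains grok, date, mutate, geoip, useragent and urldecode. As a rough illustration of what the grok, date and urldecode steps (plus the daily index name) do to one line, here is a Python approximation. Assumptions: the regex is a simplified stand-in for %{COMBINEDAPACHELOG}, not the real grok pattern; geoip and useragent are left out; `process` and the `index` key are hypothetical names.

```python
import re
from datetime import datetime, timezone
from urllib.parse import unquote

# Simplified stand-in for grok's %{COMBINEDAPACHELOG} (not the real pattern).
# Like the legacy pattern, referrer and agent keep their surrounding quotes.
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'
)


def process(message):
    m = COMBINED.match(message)
    if m is None:
        return {"tags": ["_grokparsefailure"]}   # grok's default failure tag
    event = m.groupdict()
    # date filter: 'dd/MMM/yyyy:HH:mm:ss Z' -> @timestamp in UTC
    # (popping "timestamp" mirrors the mutate that removes it)
    ts = datetime.strptime(event.pop("timestamp"), "%d/%b/%Y:%H:%M:%S %z")
    event["@timestamp"] = ts.astimezone(timezone.utc)
    # urldecode filter with all_fields => true: decode every string field
    event = {k: unquote(v) if isinstance(v, str) else v for k, v in event.items()}
    # index => "filebeat_nginx_log_%{+YYYY.MM.dd}" is formatted from @timestamp (UTC)
    event["index"] = "filebeat_nginx_log_" + event["@timestamp"].strftime("%Y.%m.%d")
    return event


line = ('36.27.37.19 - - [07/Aug/2019:15:34:20 +0800] '
        '"GET /admin/login.php?referer=https%3A%2F%2Fblog.qvbilam.xin%2Fadmin%2F HTTP/2.0" '
        '200 2198 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 '
        '(KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36"')
event = process(line)
print(event["request"])                 # /admin/login.php?referer=https://blog.qvbilam.xin/admin/
print(event["@timestamp"].isoformat())  # 2019-08-07T07:34:20+00:00
print(event["index"])                   # filebeat_nginx_log_2019.08.07
```

The index name comes from the event's own @timestamp in UTC (set by the date filter), not from the time the event arrived, which is why the Elasticsearch log further down creates filebeat_nginx_log_2019.08.07 for a line shipped on 2019-11-18.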

input{

beats{

port => 7001

}

}

filter{

grok{

match => {

"message" => "%{COMBINEDAPACHELOG}"

}

}

# 将日志时间格式化成logstsh的时间格式

# 07/Aug/2019:11:05:42 +0800

date{

match => ['timestamp','dd/MMM/yyyy:HH:mm:ss Z']

}

mutate{

# 删除字段

remove_field => ["headers","timestamp","message","agent","tags","@version","ecs","longitude","offset"]

# 设置隐藏字段 测试es索引名

add_field => { "[@metadata][index]" => "nginx_log_%{+YYYY.MM}" }

}

# ip获取信息

geoip{

source => 'clientip'

fields => ["country_name","city_name","region_name","latitude","longitude"]

}

# agent 解析

useragent{

source => 'agent'

target => "useragent"

}

# urldecode 解码

urldecode{

all_fields => true

}

}

output{

stdout{ codec => rubydebug }

elasticsearch{

hosts => ["127.0.0.1:8101"]

index => "filebeat_nginx_log_%{+YYYY.MM.dd}"

}

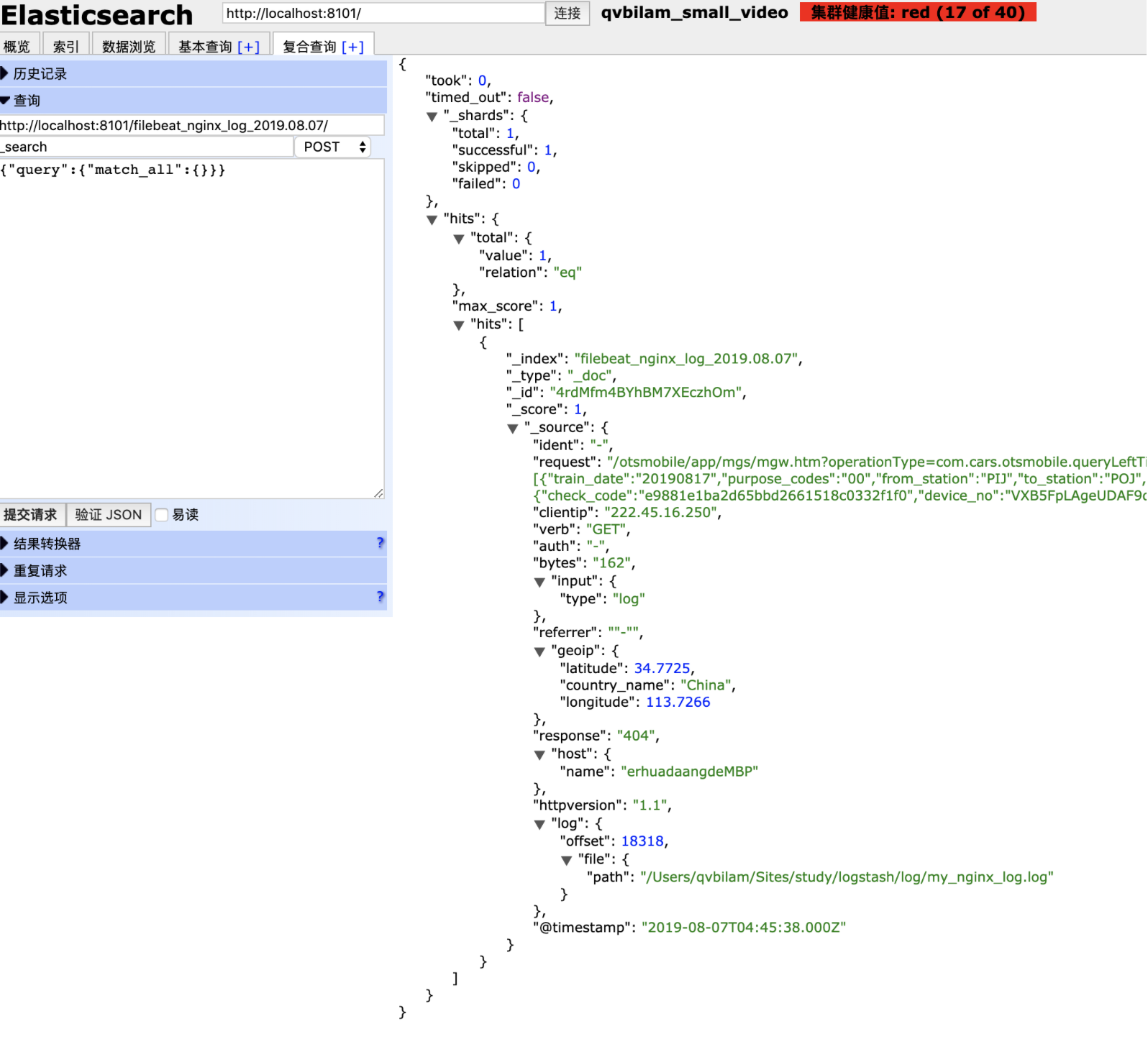

}ElasticSearch

# Start ES (port 8101)
# Start elasticsearch-head (port 8000)
cd elasticsearch-head
npm run start
# Start Logstash
logstash -f filebeat.conf
# Start Filebeat
filebeat -e -c conf/nginx.yml
# Append a log line

echo '222.45.16.250 - - [07/Aug/2019:12:45:38 +0800] "GET /otsmobile/app/mgs/mgw.htm?operationType=com.cars.otsmobile.queryLeftTicket&requestData=%5B%7B%22train_date%22%3A%2220190817%22%2C%22purpose_codes%22%3A%2200%22%2C%22from_station%22%3A%22PIJ%22%2C%22to_station%22%3A%22POJ%22%2C%22station_train_code%22%3A%22%22%2C%22start_time_begin%22%3A%220000%22%2C%22start_time_end%22%3A%222400%22%2C%22train_headers%22%3A%22QB%23%22%2C%22train_flag%22%3A%22%22%2C%22seat_type%22%3A%22%22%2C%22seatBack_Type%22%3A%22%22%2C%22ticket_num%22%3A%22%22%2C%22dfpStr%22%3A%22%22%2C%22baseDTO%22%3A%7B%22check_code%22%3A%22e9881e1ba2d65bbd2661518c0332f1f0%22%2C%22device_no%22%3A%22VXB5FpLAgeUDAF9qiX5olHvl%22%2C%22mobile_no%22%3A%22%22%2C%22os_type%22%3A%22a%22%2C%22time_str%22%3A%2220190807124538%22%2C%22user_name%22%3A%22%22%2C%22version_no%22%3A%224.2.10%22%7D%7D%5D&ts=1565153138755&sign= HTTP/1.1" 404 162 "-" "Go-http-client/1.1"' >> log/my_nginx_log.log

Filebeat Echo

2019-11-18T19:36:20.947+0800 INFO crawler/crawler.go:106 Loading and starting Inputs completed. Enabled inputs: 1

2019-11-18T19:36:30.957+0800 INFO log/harvester.go:254 Harvester started for file: /Users/qvbilam/Sites/study/logstash/log/my_nginx_log.log

2019-11-18T19:36:31.967+0800 INFO pipeline/output.go:95 Connecting to backoff(async(tcp://127.0.0.1:7001))

2019-11-18T19:36:31.972+0800 INFO pipeline/output.go:105 Connection to backoff(async(tcp://127.0.0.1:7001)) established

Logstash Echo

{
    "ident" => "-",
    "request" => "/otsmobile/app/mgs/mgw.htm?operationType=com.cars.otsmobile.queryLeftTicket&requestData=[{\"train_date\":\"20190817\",\"purpose_codes\":\"00\",\"from_station\":\"PIJ\",\"to_station\":\"POJ\",\"station_train_code\":\"\",\"start_time_begin\":\"0000\",\"start_time_end\":\"2400\",\"train_headers\":\"QB#\",\"train_flag\":\"\",\"seat_type\":\"\",\"seatBack_Type\":\"\",\"ticket_num\":\"\",\"dfpStr\":\"\",\"baseDTO\":{\"check_code\":\"e9881e1ba2d65bbd2661518c0332f1f0\",\"device_no\":\"VXB5FpLAgeUDAF9qiX5olHvl\",\"mobile_no\":\"\",\"os_type\":\"a\",\"time_str\":\"20190807124538\",\"user_name\":\"\",\"version_no\":\"4.2.10\"}}]&ts=1565153138755&sign=",
    "clientip" => "222.45.16.250",
    "verb" => "GET",
    "auth" => "-",
    "bytes" => "162",
    "input" => {
        "type" => "log"
    },
    "referrer" => "\"-\"",
    "geoip" => {
        "latitude" => 34.7725,
        "country_name" => "China",
        "longitude" => 113.7266
    },
    "response" => "404",
    "host" => {
        "name" => "erhuadaangdeMBP"
    },
    "httpversion" => "1.1",
    "log" => {
        "offset" => 18318,
        "file" => {
            "path" => "/Users/qvbilam/Sites/study/logstash/log/my_nginx_log.log"
        }
    },
    "@timestamp" => 2019-08-07T04:45:38.000Z
}

ElasticSearch Echo

[2019-11-18T19:30:47,157][INFO ][o.e.l.LicenseService ] [qvbilam_small_video_1] license [d2b1dee8-d297-439c-b0c3-c09e18e105cd] mode [basic] - valid

[2019-11-18T19:30:47,170][INFO ][o.e.g.GatewayService ] [qvbilam_small_video_1] recovered [7] indices into cluster_state

[2019-11-18T19:30:47,298][INFO ][o.w.a.d.Monitor ] [qvbilam_small_video_1] try load config from /Users/qvbilam/Sites/study/ElasticSearch/elasticsearch-7.1.0/config/analysis-ik/IKAnalyzer.cfg.xml

[2019-11-18T19:30:47,299][INFO ][o.w.a.d.Monitor ] [qvbilam_small_video_1] try load config from /Users/qvbilam/Sites/study/ElasticSearch/elasticsearch-7.1.0/plugins/ik/config/IKAnalyzer.cfg.xml

[2019-11-18T19:36:32,938][INFO ][o.e.c.m.MetaDataCreateIndexService] [qvbilam_small_video_1] [filebeat_nginx_log_2019.08.07] creating index, cause [auto(bulk api)], templates [], shards [1]/[1], mappings []

[2019-11-18T19:36:33,234][INFO ][o.e.c.m.MetaDataMappingService] [qvbilam_small_video_1] [filebeat_nginx_log_2019.08.07/Ki1Nef1vSWa3sBry7bKxFw] create_mapping [_doc]

Success