MHA简介

MHA(Master High Availability)在主从复制架构中完成故障切换,在所有的从库中选取新的主库,并高效的将其他从库与新的主库进行主从切换.在MHA架构中支持日志点的复制方式,同时也支持GTID方式.

MHA功能

- 只监控主数据库.

- 主库宕机后,在从库中选取新的主库,并从宕机的主库中保存二进制日志传输到从服务器中(不一定会成功,如获取不到二进制文件,只进行故障转移.).

- 自动主从切换,故障转移.

MHA流程

集群内所有主机相互之间都需要SSH免认证登录

- 对主库进行监控.

- 当主库出现故障,通过ssh方式保存主库的二进制文件.

- 在从库群中选取最新更新的从库来做为新的主备库(可以设置部分从库不参与选举).

- 将新选的主备库与其他从库进行同步(在主库宕机后从库间可能会产生差异,为了将新的集群数据同步).

- 新主备库会保存旧的主库二进制日志(能通过SSH在旧主库获取到二进制文件).如出现主键重复等错误停止故障转移.

- 将主备库专程新的主库.

- 将从库重新设置复制的主库.

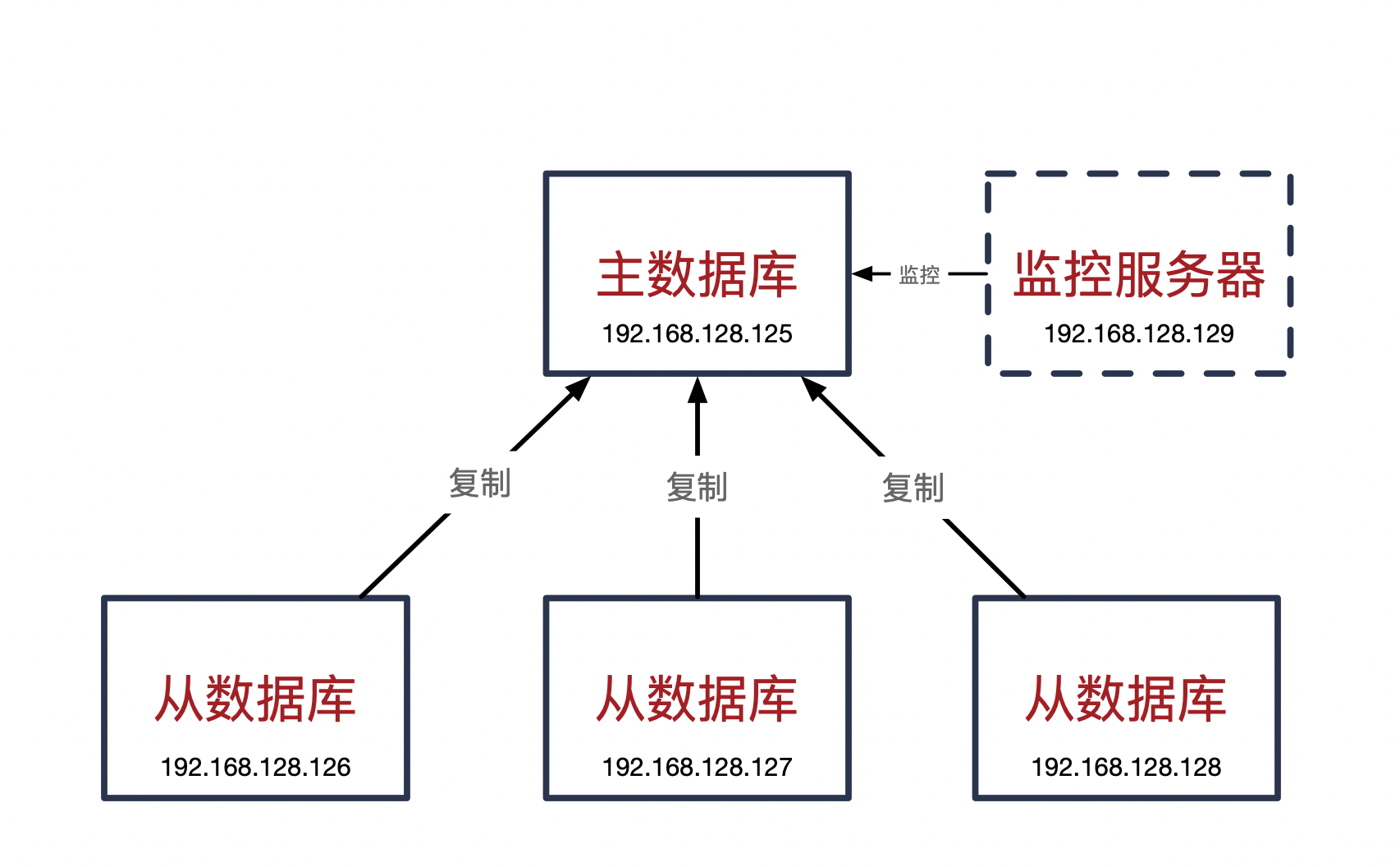

集群介绍

| 服务器 | 功能 | server-id(my.cnf配置,不可重复) |

|---|---|---|

| 192.168.128.125 | 主数据库 | 11 |

| 192.168.128.126 | 从数据库 | 12 |

| 192.168.128.127 | 从数据库 | 13 |

| 192.168.128.128 | 从数据库 | 14 |

| 192.168.128.129 | 监控服务器 | 15(可以不设置) |

免认证登录

每台服务器都要设置,包括本机

以主数据库(125)为列

# 生成密钥,一直回车即可

ssh-keygen

# 将密钥拷贝到其余的服务器中

ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@192.168.128.125

ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@192.168.128.126

ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@192.168.128.127

ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@192.168.128.128

ssh-copy-id -i /root/.ssh/id_rsa -p 22 root@192.168.128.129

# 在125中使用下面命令登录每台服务器(包括自己),不要看返回信息.如不用密码登录其他服务器则成功

ssh root@192.168.128.125

ssh root@192.168.128.126

ssh root@192.168.128.127

ssh root@192.168.128.128

ssh root@192.168.128.129主从复制

my.cnf

基于GTID复制演示

vi /etc/my.cnf

# 修改server-id为不重复,添加gtid_mode

server-id = 15

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository = TABLE

relay_log_info_repository = TABLE验证

修改配置后重启mysql服务后进入mysql验证

show variables like '%gtid%';

+----------------------------------+-----------+

| Variable_name | Value |

+----------------------------------+-----------+

| binlog_gtid_simple_recovery | ON |

| enforce_gtid_consistency | ON |

| gtid_executed_compression_period | 1000 |

| gtid_mode | ON |

| gtid_next | AUTOMATIC |

| gtid_owned | |

| gtid_purged | |

| session_track_gtids | OFF |

+----------------------------------+-----------+

8 rows in set (0.01 sec)主库

创建主从复制账号

# 创建主从复制账号

create user qvbilam_copy@'192.168.128.%' identified by 'qvbilam';

# 赋予slave权限

grant replication slave on *.* to 'qvbilam_copy'@'192.168.128.%';

# 重新加载权限

FLUSH PRIVILEGES;同步数据

# 备份所有数据库

mysqldump --single-transaction --set-gtid-purged=OFF --master-data -A -uroot -p >> /data/back.sql

# 纯属到每台从库中

scp /data/back.sql root@192.168.128.126:/data

scp /data/back.sql root@192.168.128.127:/data

scp /data/back.sql root@192.168.128.128:/data

# 从服务器加载数据启动

mysql -uroot -p < /data/back.sql从库

change master to

master_host='192.168.128.125',

master_user='qvbilam_copy',

master_password='qvbilam',

master_auto_position=1;

start slave;

show slave status \G;

# 当Slave_IO_Running和Slave_SQL_Running为yes算成功MHA安装

安装MHA-node

# 安装依赖包

yum install -y perl-DBD-MySQL ncftp perl-DBI.x86_64

# 如果出现没有软件包需要修改yum镜像源

yum remove -y epel-release && yum install epel-release -y && yum clean all && yum makecache && yum install -y perl-DBD-MySQL ncftp perl-DBI.x86

# 安装node

rpm -ivh mha4mysql-node-0.58-0.el7.centos.noarch.rpm

准备中... ################################# [100%]

正在升级/安装...

1:mha4mysql-node-0.58-0.el7.centos ################################# [100%]安装MHA-manager

# 安装依赖包

yum install -y perl-DBD-MySQL ncftp perl-DBI.x86_64 perl-Parallel* perl-Log-Dispatch* gd-devel perl-log* perl-Time*

# 如果出现没有软件包需要修改yum镜像源,直接执行这步安装依赖包即可

yum remove -y epel-release && yum install epel-release -y && yum clean all && yum makecache && yum install -y perl-DBD-MySQL ncftp perl-DBI.x86_64 perl-Parallel* perl-Log-Dispatch* gd-devel perl-log* perl-Time*

# 安装manager

rpm -ivh mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

准备中... ################################# [100%]

正在升级/安装...

1:mha4mysql-manager-0.58-0.el7.cent################################# [100%] MHA配置

管理账号

-- 主库创建管理账号,从库会自动复制

grant all privileges on *.* to qvbilam_mha@'192.168.128.%' identified by 'qvbilam';

-- 在从库上验证是否创建账号

use mysql

select user,host from user where user='qvbilam_mha';

+-------------+---------------+

| user | host |

+-------------+---------------+

| qvbilam_mha | 192.168.128.% |

+-------------+---------------+

1 row in set (0.01 sec)

-- 顺便看一眼权限是否为全部

show grants for 'qvbilam_mha'@'192.168.128.%';

+--------------------------------------------------------------+

| Grants for qvbilam_mha@192.168.128.% |

+--------------------------------------------------------------+

| GRANT ALL PRIVILEGES ON *.* TO 'qvbilam_mha'@'192.168.128.%' |

+--------------------------------------------------------------+

1 row in set (0.00 sec)

# 记录bin-log日志存放目录

show variables like '%log_bin%';

+---------------------------------+-----------------------------+

| Variable_name | Value |

+---------------------------------+-----------------------------+

| log_bin | ON |

| log_bin_basename | /data/mysql/mysql-bin |

| log_bin_index | /data/mysql/mysql-bin.index |

| log_bin_trust_function_creators | OFF |

| log_bin_use_v1_row_events | OFF |

| sql_log_bin | ON |

+---------------------------------+-----------------------------+

6 rows in set (0.07 sec)

# 也就是log_bin_basename的值配置文件

基于GTID复制演示

# 创建mha工作目录 自定义

mkdir -p /data/mysql-mha

# 创建配置目录和工作目录

mkdir -p /etc/mha

# 创建mha配置

cd /etc/mha

touch mha.cnf

vi /etc/mha/mha.cnf配置内容

password=qvbilam

[server default]

user=qvbilam_mha

password=qvbilam

# 工作目录

manager_workdir=/data/mysql-mha

# 日志

manager_log=/data/mysql-mha/mha.log

# 远程服务器工作目录

remote_workdir=

# ssh登录用户

ssh_user=root

[server default]

# MySQL中管理mha密码账号

user=qvbilam_mha

# MySQL中管理mha密码

password=qvbilam

# SSH免认证登录的账号

ssh_user=root

# Mysql主从复制账号

repl_user=qvbilam_copy

# MySQL主从复制密码

repl_password=qvbilam

# mha工作目录

manager_workdir=/data/mysql-mha

# mha日志存放路径

manager_log=/data/mysql-mha/mha.log

# 远程工作目录,在从库上都需要创建好这个目录

remote_workdir=/data/mysql-mha

# 监测主库的间隔,以秒为单位

ping_interval=1

# mysql主库二进制文件目录

master_binlog_dir=/data/mysql/mysql-bin

[server1]

hostname=192.168.128.125

# 参与选举(故障转移)

candidate_master=1

[server2]

hostname=192.168.128.126

# 参与选举(故障转移)

candidate_master=1

[server3]

hostname=192.168.128.127

# 参与选举(故障转移)

candidate_master=1

[server4]

hostname=192.168.128.128

# 不参与选举

no_master=1验证

ssh免密登录

masterha_check_ssh --conf=/etc/mha/mha.cnf

# 直到最后一条返回successfully算是成功,如下

Tue Feb 11 03:04:12 2020 - [debug] Connecting via SSH from root@192.168.128.128(192.168.128.128:22) to root@192.168.128.125(192.168.128.125:22)..

Tue Feb 11 03:04:23 2020 - [debug] ok.

Tue Feb 11 03:04:23 2020 - [debug] Connecting via SSH from root@192.168.128.128(192.168.128.128:22) to root@192.168.128.126(192.168.128.126:22)..

Tue Feb 11 03:04:34 2020 - [debug] ok.

Tue Feb 11 03:04:34 2020 - [debug] Connecting via SSH from root@192.168.128.128(192.168.128.128:22) to root@192.168.128.127(192.168.128.127:22)..

Tue Feb 11 03:04:44 2020 - [debug] ok.

Tue Feb 11 03:04:45 2020 - [info] All SSH connection tests passed successfully.主从复制

masterha_check_repl --conf=/etc/mha/mha.cnf

# 返回下面结果为成功

Tue Feb 11 03:06:46 2020 - [info] Checking replication health on 192.168.128.126..

Tue Feb 11 03:06:46 2020 - [info] ok.

Tue Feb 11 03:06:46 2020 - [info] Checking replication health on 192.168.128.127..

Tue Feb 11 03:06:46 2020 - [info] ok.

Tue Feb 11 03:06:46 2020 - [info] Checking replication health on 192.168.128.128..

Tue Feb 11 03:06:46 2020 - [info] ok.

Tue Feb 11 03:06:46 2020 - [warning] master_ip_failover_script is not defined.

Tue Feb 11 03:06:46 2020 - [warning] shutdown_script is not defined.

Tue Feb 11 03:06:46 2020 - [info] Got exit code 0 (Not master dead).

# 注意提示这里少2个脚本.将在脚本2级栏目进行汇总脚本

脚本下载地址

主从切换后漂移虚IP脚本

# 在主库上执行一次添加虚拟ip后,主库宕机后会自动将虚拟ip转移到新的主库上

ifconfig ens33:1 192.168.128.25/24

# vi /etc/mha/mha.cnf

# mha配置参数

master_ip_failover_script= 脚本路径

# 示例

master_ip_failover_script=/etc/mha/scripts/master_ip_failover注意脚本需要修改的内容

my $vip = 定义的虚拟IP

# 例如 my $vip = '192.168.128.25/24';

my $ssh_start_vip 和 my $ssh_stop_vip根据网卡设置

# 例如查看网卡

ifconfig

# 获得为的网卡为ens33.

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

# 示例

my $ssh_start_vip = "/sbin/ifconfig ens33:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig ens33:$key down";邮件报警脚本

# vi /etc/mha/mha.cnf

# 示例

report_script=report_script=/etc/mha/scripts/send_report结果演示

查看主库虚拟ip

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:d9:5b:44 brd ff:ff:ff:ff:ff:ff

inet 192.168.128.125/24 brd 192.168.128.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.128.25/24 brd 192.168.128.255 scope global secondary ens33:1

valid_lft forever preferred_lft forever

inet6 fe80::e38:e0fe:ec0e:5d2/64 scope link noprefixroute

valid_lft forever preferred_lft forever监控服务器启动mha

# 监控服务器后台运行mha

[root@localhost ~]# nohup masterha_manager --conf=/etc/mha/mha.cnf &

# 查看状态

[root@localhost ~]# masterha_check_status --conf=/etc/mha/mha.cnf

mha (pid:20786) is running(0:PING_OK), master:192.168.128.125查看主库ip

在主库上设置了"ifconfig 网卡:ip/24"才会有

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:d9:5b:44 brd ff:ff:ff:ff:ff:ff

inet 192.168.128.125/24 brd 192.168.128.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.128.25/24 brd 192.168.128.255 scope global secondary ens33:1

valid_lft forever preferred_lft forever

inet6 fe80::e38:e0fe:ec0e:5d2/64 scope link noprefixroute

valid_lft forever preferred_lft forever故障模拟

# 结束主库服务

service mysql stop

# 查看从库127复制信息

show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.128.126查看新主库

# 虚拟ip是否漂移到126

[root@localhost ~]# ip addr

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:42:db:6d brd ff:ff:ff:ff:ff:ff

inet 192.168.128.126/24 brd 192.168.128.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::e860:35ea:24d2:c7ee/64 scope link noprefixroute

valid_lft forever preferred_lft forever监控服务器部分日志

----- Failover Report -----

mha: MySQL Master failover 192.168.128.125(192.168.128.125:3306) to 192.168.128.126(192.168.128.126:3306) succeeded

Master 192.168.128.125(192.168.128.125:3306) is down!

Check MHA Manager logs at localhost.localdomain:/data/mysql-mha/mha.log for details.

Started automated(non-interactive) failover.

Selected 192.168.128.126(192.168.128.126:3306) as a new master.

192.168.128.126(192.168.128.126:3306): OK: Applying all logs succeeded.

192.168.128.128(192.168.128.128:3306): OK: Slave started, replicating from 192.168.128.126(192.168.128.126:3306)

192.168.128.127(192.168.128.127:3306): OK: Slave started, replicating from 192.168.128.126(192.168.128.126:3306)

192.168.128.126(192.168.128.126:3306): Resetting slave info succeeded.

Master failover to 192.168.128.126(192.168.128.126:3306) completed successfully.故障汇总

主从复制故障

故障一

-- 执行show slave status 出现如下错误

Last_IO_Error: Got fatal error 1236 from master when reading data from binary log: "Slave has more GTIDs than the master has, using the master's SERVER_UUID. This may indicate that the end of the binary log was truncated or that the last binary log file was lost, e.g., after a power or disk failure when sync_binlog != 1. The master may or may not have rolled back transactions that were already replica"

-- 解决

stop slave;

reset slave;

reset master;

-- 重新执行change master to故障二

-- 从库上执行show slave status出现错误

Error 'Operation CREATE USER failed for 'qvbilam_copy'@'192.168.128.%'' on query. Default database: ''. Query: 'CREATE USER 'qvbilam_copy'@'192.168.128.%' IDENTIFIED WITH 'mysql_native_password' AS '*B51AF62AFB7D7503BFE3A5BF7F4A06AABE9463FB''

-- 解决,哪台从库出问题在哪执行,不要在主库执行

drop user qvbilam_copy@'192.168.128.%';

FLUSH PRIVILEGES;

stop slave;

start slave;软件包故障

故障一

# rpm安装manager出现错误

rpm -ivh mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

# 出现缺依赖错误

错误:依赖检测失败:

mha4mysql-node >= 0.54 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

perl(Log::Dispatch) 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

perl(Log::Dispatch::File) 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

perl(Log::Dispatch::Screen) 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

perl(MHA::BinlogManager) 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

perl(MHA::NodeConst) 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

perl(MHA::NodeUtil) 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

perl(MHA::SlaveUtil) 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

perl(Parallel::ForkManager) 被 mha4mysql-manager-0.58-0.el7.centos.noarch 需要

# 解决 - 缺啥安啥,缺少MHA相关的执行MHA-node安装即可

yum install -y perl-Parallel-ForkManager.noarch perl-Log-Dispatch* gd-devel

# ok

rpm -ivh mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

准备中... ################################# [100%]

正在升级/安装...

1:mha4mysql-manager-0.58-0.el7.cent################################# [100%] 故障二

# 执行masterha_check_rep报错

[error][/usr/share/perl5/vendor_perl/MHA/ServerManager.pm, ln301] install_driver(mysql) failed: Attempt to reload DBD/mysql.pm aborted.

Compilation failed in require at (eval 26) line 3.

at /usr/share/perl5/vendor_perl/MHA/DBHelper.pm line 205

at /usr/share/perl5/vendor_perl/MHA/Server.pm line 166

Tue Feb 11 02:39:23 2020 - [error][/usr/share/perl5/vendor_perl/MHA/ServerManager.pm, ln309] Got fatal error, stopping operations

Tue Feb 11 02:39:23 2020 - [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln424] Error happened on checking configurations. at /usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm line 326

Tue Feb 11 02:39:23 2020 - [error][/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm, ln523] Error happened on monitoring servers.

Tue Feb 11 02:39:23 2020 - [info] Got exit code 1 (Not master dead).

MySQL Replication Health is NOT OK!

# 安装cpan

yum install -y cpan

# 执行cpan 回答yes / sudo

cpan

# 到了等待输入的地方执行

cpan[1]> force install GD

cpan[2]> exit

# 执行安装

cpan DBD::mysql

# 如出现错误,就是缺啥补啥,带上*暴力安装.就不信还缺少东西

yum install -y perl-Devel*MHA故障

故障一

# 手动停掉主库mysql服务后 没有主从切换.mha服务挂掉,且日志报错

Tue Feb 11 22:21:58 2020 - [error][/usr/share/perl5/vendor_perl/MHA/MasterFailover.pm, ln310] Last failover was done at 2020/02/11 19:56:06. Current time is too early to do failover again. If you want to do failover, manually remove /data/mysql-mha/mha.failover.complete and run this script again.

Tue Feb 11 22:21:58 2020 - [error][/usr/share/perl5/vendor_perl/MHA/ManagerUtil.pm, ln177] Got ERROR: at /usr/bin/masterha_manager line 65.

# 切换频繁,8小时内切换就会出现这个问题,可以手动删除文件

rm /data/mysql-mha/mha.failover.complete

# 根据错误提示的mha.failover.complete路径删除,因为个人设定的工作目录可能不一样故障二

# 发送邮件脚本导致mha服务挂掉,mha错误日志

Wed Feb 12 00:25:31 2020 - [info] Sending mail..

Unknown option: conf

Can't call method "MailMsg" without a package or object reference at /etc/mha/scripts/send_report line 66.

Wed Feb 12 00:25:51 2020 - [error][/usr/share/perl5/vendor_perl/MHA/MasterFailover.pm, ln2089] Failed to send mail with return code 2:0

# 缺少Mail::Sender

yum install -y cpan # 如有安装跳过这部

yum install -y perl-Getopt*

cpan

# 再次执行测试,如果不支持Mail安装

cpan[1]> install Email:Simple

cpan[2]> install Email:Sender:Simple

cpan[3]> IO::Socket::SSL

cpan[4]> Email::Sender::Transport::SMTP::TLS

cpan[5]> Net::SMTP::TLS::ButMaintained

cpan[6]> quit

# 使用Email模块